JimEJamm: Computers, Cars, Catholicism.

-

Intro to HammerDB

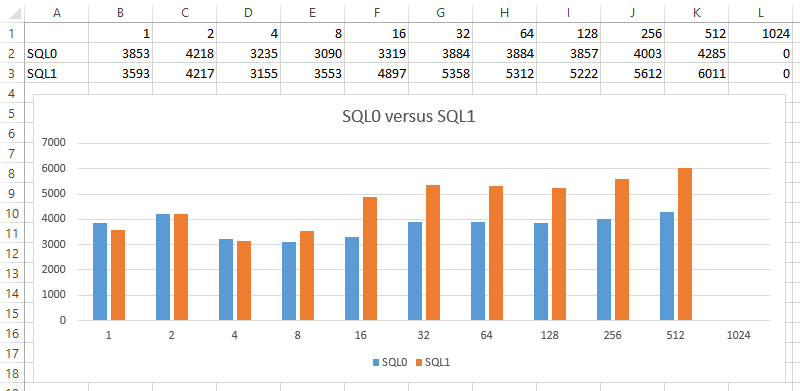

One of my favorite benchmarking tools is HammerDB. In a nutshell, it lets you run a mature, well-established SQL benchmark (TPC-C) against your system to gauge performance. Picture an order/warehouse simulator: your metric is how many new orders per minute (NOPM) the system can crank out. CPU, memory, disk all matter […]
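At its simplest, NOPM is completed new orders divided by elapsed minutes. The sketch below illustrates that arithmetic only. It assumes you have a cumulative new-order count sampled at the start and end of a timed run; the function name and the numbers are hypothetical, and this is not HammerDB's own code.

```python
def new_orders_per_minute(orders_at_start: int, orders_at_end: int, elapsed_seconds: float) -> float:
    """NOPM from two snapshots of a (hypothetical) cumulative new-order counter."""
    if elapsed_seconds <= 0:
        raise ValueError("elapsed_seconds must be positive")
    completed = orders_at_end - orders_at_start
    # Convert the elapsed time to minutes before dividing.
    return completed / (elapsed_seconds / 60.0)


# Hypothetical run: 150,000 new orders completed in a 5-minute timed window.
print(new_orders_per_minute(1_000_000, 1_150_000, 300))  # 30000.0
```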

-

DSQuery

Using DSQuery to disable user accounts after an inactivity period.

Query AD for users that have been inactive for 13 weeks:

dsquery user "CN=Users,DC=Domain,DC=local" -inactive 13
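As I understand it, dsquery's -inactive switch works from the lastLogonTimestamp attribute, which AD stores as a Windows FILETIME (100-nanosecond ticks since 1601-01-01 UTC) and replicates only periodically, so treat the cutoff as approximate. The sketch below shows the same "older than N weeks" test in Python. The helper names and the sample dates are hypothetical; it does not query AD.

```python
from datetime import datetime, timedelta, timezone

# AD timestamps such as lastLogonTimestamp are FILETIME values:
# 100-nanosecond ticks since 1601-01-01 00:00 UTC.
FILETIME_EPOCH = datetime(1601, 1, 1, tzinfo=timezone.utc)
TICKS_PER_SECOND = 10_000_000


def filetime_to_datetime(filetime: int) -> datetime:
    # 10 ticks = 1 microsecond, the finest resolution datetime supports.
    return FILETIME_EPOCH + timedelta(microseconds=filetime // 10)


def datetime_to_filetime(dt: datetime) -> int:
    # Integer arithmetic avoids float rounding on these large tick counts.
    delta = dt - FILETIME_EPOCH
    return (delta.days * 86_400 + delta.seconds) * TICKS_PER_SECOND + delta.microseconds * 10


def is_inactive(last_logon_filetime: int, weeks: int, now: datetime) -> bool:
    """True if the last logon is more than `weeks` weeks before `now`."""
    cutoff = now - timedelta(weeks=weeks)
    return filetime_to_datetime(last_logon_filetime) < cutoff


# Hypothetical accounts: one last seen 14 weeks ago, one 12 weeks ago.
now = datetime(2024, 1, 1, tzinfo=timezone.utc)
print(is_inactive(datetime_to_filetime(now - timedelta(weeks=14)), 13, now))  # True
print(is_inactive(datetime_to_filetime(now - timedelta(weeks=12)), 13, now))  # False
```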

-

IOPS calculations

Read and Write IOPS

Total the read and write IOPS and calculate the number of recommended spindles. For example, 10K RPM spindles can be assumed to support 130 IOPS, and 15K RPM spindles 180 IOPS. The total back-end IOPS workload is a function of the front-end (host) workload and the RAID […]
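A common way to size this is: back-end IOPS = read IOPS + (write IOPS × RAID write penalty), then divide by the per-spindle figure and round up. The sketch below uses the 130 and 180 IOPS figures from above; the write penalties are commonly cited rule-of-thumb values (an assumption, not vendor data), and the workload numbers are hypothetical.

```python
import math

# Rule-of-thumb RAID write penalties (back-end I/Os per host write).
# Assumed values; check your array vendor's guidance.
RAID_WRITE_PENALTY = {"RAID0": 1, "RAID1": 2, "RAID10": 2, "RAID5": 4, "RAID6": 6}

# Per-spindle IOPS figures from the post.
SPINDLE_IOPS = {"10K": 130, "15K": 180}


def backend_iops(read_iops: int, write_iops: int, raid_level: str) -> int:
    """Reads pass through 1:1; each host write costs `penalty` back-end I/Os."""
    return read_iops + write_iops * RAID_WRITE_PENALTY[raid_level]


def spindles_needed(read_iops: int, write_iops: int, raid_level: str, rpm: str) -> int:
    """Round up: a fractional spindle still means buying a whole disk."""
    return math.ceil(backend_iops(read_iops, write_iops, raid_level) / SPINDLE_IOPS[rpm])


# Hypothetical host workload: 1,200 read IOPS and 400 write IOPS.
for level in ("RAID10", "RAID5"):
    for rpm in ("10K", "15K"):
        print(level, rpm, backend_iops(1200, 400, level), spindles_needed(1200, 400, level, rpm))
```

With these inputs, RAID 5 needs 2,800 back-end IOPS (22 × 10K or 16 × 15K spindles) versus 2,000 for RAID 10 (16 or 12). The sketch does not round up to a valid RAID group size, such as an even disk count for RAID 10.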

-

Hyper-V Server 2012 R2 links

Sign up for a copy through Microsoft evaluations to get online video courses (example), virtual labs, white papers, and more.

Links: Download ISO, TechNet details, TechNet forum, TechNet blog.

-

NTP tips

Checking your time against a reference NTP server:

w32tm /stripchart /computer:pool.ntp.org /samples:5 /dataonly

Checking time across multiple systems using psexec (from Sysinternals.com):

psexec \\computer1,computer2,computer3 w32tm /stripchart /computer:pool.ntp.org /samples:5 /dataonly

Setting the time service to sync from NTP and serve NTP requests (for all AD servers):

Step 1:

w32tm /config /syncfromflags:manual /manualpeerlist:pool.ntp.org /reliable:yes /update

w32tm /resync

Step 2:

[HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Services\W32Time\Config] […]
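The offset that w32tm /stripchart reports is the gap between the local clock and the target server. NTP clients generally estimate it from four timestamps (RFC 5905): offset = ((t1 − t0) + (t2 − t3)) / 2 and round-trip delay = (t3 − t0) − (t2 − t1). The sketch below only does that arithmetic; it sends no packets, and the timestamps are made up.

```python
def ntp_offset_and_delay(t0: float, t1: float, t2: float, t3: float) -> tuple[float, float]:
    """
    t0: client transmit time (client clock)
    t1: server receive time (server clock)
    t2: server transmit time (server clock)
    t3: client receive time (client clock)
    Returns (offset, round_trip_delay) in the same units as the inputs.
    A positive offset means the server clock is ahead of the client clock.
    """
    offset = ((t1 - t0) + (t2 - t3)) / 2
    # Total round trip minus the time the server spent holding the request.
    delay = (t3 - t0) - (t2 - t1)
    return offset, delay


# Hypothetical exchange in milliseconds: client clock 500 ms behind the server,
# 50 ms each way on the network, 10 ms of server processing.
print(ntp_offset_and_delay(99_500, 100_050, 100_060, 99_610))  # (500.0, 100)
```

The formula assumes the outbound and return paths take equal time; any asymmetry shows up as error in the offset estimate.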

-

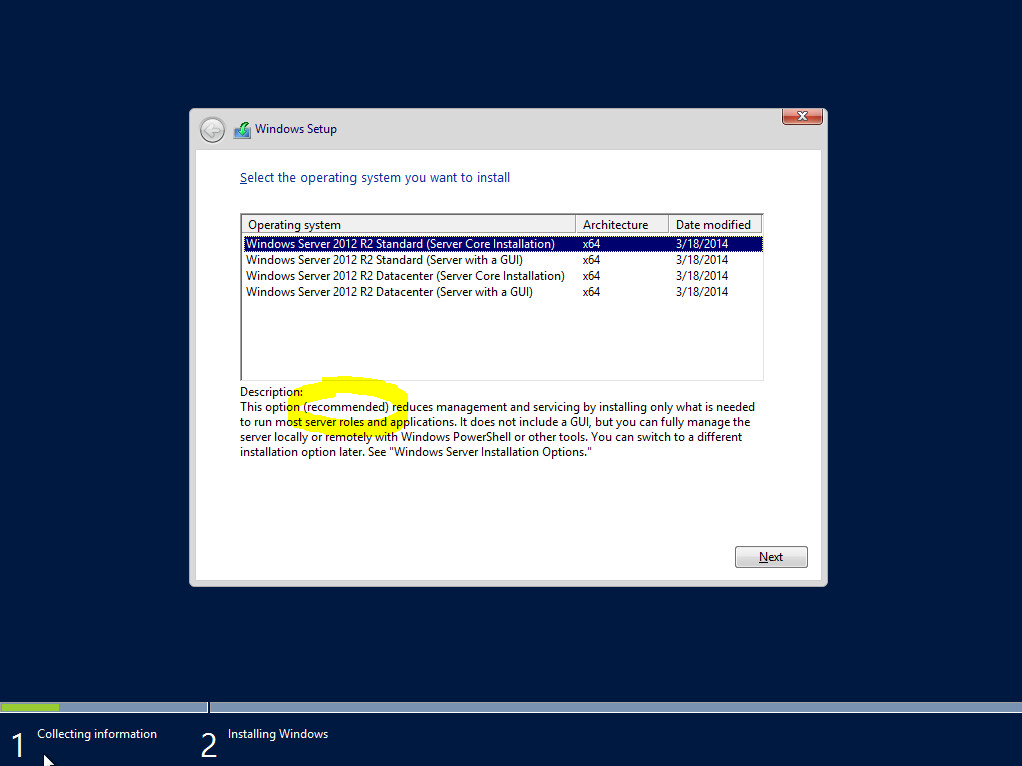

Core versus Full – Initial install quick stats

I created two VMs with identical resources. On the first VM, I chose a Windows Server 2012 R2 Core installation; on the second, Windows Server 2012 R2 with a GUI (Full). After installing both, I ran Windows Update on each until no more updates were found. Here are the quick stats: Core has […]

-

Project Bookshelf

Project Bookshelf: The events depicted in this story are fictional. Any similarity to any person living or dead is merely coincidental. (Moe) Team, let’s get these books off the floor by putting up a bookshelf on that nice sturdy wall over there. (Kirk & Guy) Sounds great! (Kirk) We found a bookshelf that should fit. […]

Got any book recommendations?